Terms

| Terms | Description |
|---|---|
| Execute Action Table | Executes an action file on Alpha2 |
| Voice Dictation | Transcribes speech into text (STT) |
| Speech Synthesis | Synthesizes text into speech (TTS) |
| Semantic Understanding | Understands a question and returns an answer (Q&A) |
| Syntax Recognition | Recognizes predefined words that activate Voice Dictation on Alpha2 |
| Occupy and Release Microphone | When the robot is idle but occupying the microphone, it must release the microphone so that a third-party application can use it. When the third-party application no longer needs the microphone, the robot can occupy it again. |

About Integration

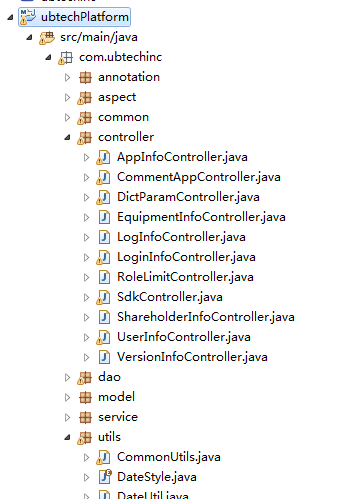

[2] Copy alpha2robotlib.jar and alpha2serverlibutil.jar into the "libs" directory of the Android project, as shown below.

| Parameter | Description |
|---|---|
| this | Context of the application |
| APPID (e.g. "123456") | Unique identifier of your application; you apply for it when you create the application |

Initialise the voice service object:

```java
mRobot.initSpeechApi(IClientListene, ISpeechInitInterface);
```

| Parameter | Description |
|---|---|
| IClientListene | Implementation of the client connection interface; receives the voice dictation results and the TTS playback-completion callback. |
| ISpeechInitInterface | Implementation of the initialisation interface; receives the callback when voice initialisation completes. |

ISpeechInitInterface:

```java
public void initOver() {
    // Callback: the voice service object has been initialised
    mRobot.speech_setVoiceName("xiaoyan");         // set the TTS voice (pronunciation)
    mRobot.speech_setRecognizedLanguage("zh_cn");  // set the recognition language
    mRobot.speech_startRecognized("");             // start voice recognition
}
```
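In the snippet above, the voice name, recognition language and recognition start are all set inside initOver(), that is, only after the voice service has finished initialising. A minimal sketch of one way to keep that ordering when setup calls come from elsewhere in the app: a hypothetical InitGate that queues work until the init callback fires and then runs it in order. InitGate and its methods are illustrative names, not part of the Alpha2 SDK.

```java
import java.util.ArrayDeque;
import java.util.Queue;

/** Hypothetical helper: defers work until the voice service reports initOver(). */
class InitGate {
    private final Queue<Runnable> pending = new ArrayDeque<>();
    private boolean initialised = false;

    /** Runs the task now if initialisation is done, otherwise queues it. */
    synchronized void runWhenReady(Runnable task) {
        if (initialised) {
            task.run();
        } else {
            pending.add(task);
        }
    }

    /** Intended to be called from ISpeechInitInterface.initOver(); runs queued tasks in order. */
    synchronized void markInitialised() {
        initialised = true;
        Runnable task;
        while ((task = pending.poll()) != null) {
            task.run();
        }
    }

    synchronized boolean isInitialised() {
        return initialised;
    }
}
```

You would call gate.markInitialised() from initOver() and wrap calls such as mRobot.speech_startRecognized("") in gate.runWhenReady(...). Queued tasks run while the gate's lock is held, which keeps the sketch simple but means they should be short.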

IClientListene:

```java
@Override
public void onServerCallBack(String text) {
    // Callback of the voice dictation result
}

@Override
public void onServerPlayEnd(boolean isEnd) {
    // Callback of TTS playback completion
}
```
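onServerCallBack(String text) is where dictation results arrive, and they arrive continuously over a period of time, so an app typically collects them. A minimal sketch, assuming each callback carries one text fragment (the source does not specify the format of text): a hypothetical DictationCollector that keeps non-blank fragments and returns them joined. The class and its methods are illustrative, not SDK API.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical collector for the strings passed to onServerCallBack(String text). */
class DictationCollector {
    private final List<String> fragments = new ArrayList<>();

    /** Call from onServerCallBack; null or blank results are ignored. */
    synchronized void onResult(String text) {
        if (text != null && !text.trim().isEmpty()) {
            fragments.add(text.trim());
        }
    }

    /** Returns all fragments collected so far, joined with the given separator. */
    synchronized String transcript(String separator) {
        return String.join(separator, fragments);
    }

    /** Returns the collected fragments and clears them, e.g. before a new session. */
    synchronized List<String> drain() {
        List<String> copy = new ArrayList<>(fragments);
        fragments.clear();
        return Collections.unmodifiableList(copy);
    }
}
```

Call collector.onResult(text) from onServerCallBack. With the zh_cn recognition language an empty separator (transcript("")) is probably the better choice, since Chinese text is not space-delimited.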

Voice dictation:

Once IClientListene is implemented, the robot delivers continuous voice dictation results over a period of time through the onServerCallBack method.

Speech synthesis (TTS):

```java
// Use the default voice: Xiaoyan
mRobot.speech_StartTTS("Hello, my name is Alpha, nice to meet you!");

// Use the voice Catherine
mRobot.speech_startTTS("Hello, my name is Alpha", "Catherine");
```
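onServerPlayEnd(boolean isEnd) signals that TTS playback has finished, which makes it possible to speak several sentences one after another instead of overlapping them. A minimal sketch under two assumptions (the source does not say whether a new TTS call interrupts the current one, nor exactly what isEnd means, so isEnd == true is taken as "finished"): a hypothetical TtsQueue that starts the next utterance only after the play-end callback. The Speaker interface is an illustrative stand-in for mRobot.speech_startTTS(text, voice).

```java
import java.util.ArrayDeque;
import java.util.Queue;

/** Hypothetical stand-in for mRobot.speech_startTTS(text, voice). */
interface Speaker {
    void speak(String text, String voice);
}

/** Hypothetical queue that plays one utterance at a time. */
class TtsQueue {
    private static final class Utterance {
        final String text;
        final String voice;

        Utterance(String text, String voice) {
            this.text = text;
            this.voice = voice;
        }
    }

    private final Speaker speaker;
    private final Queue<Utterance> pending = new ArrayDeque<>();
    private boolean playing = false;

    TtsQueue(Speaker speaker) {
        this.speaker = speaker;
    }

    /** Queues text; starts playback immediately if nothing is playing. */
    synchronized void say(String text, String voice) {
        pending.add(new Utterance(text, voice));
        if (!playing) {
            playNext();
        }
    }

    /** Intended to be called from IClientListene.onServerPlayEnd(isEnd). */
    synchronized void onPlayEnd(boolean isEnd) {
        if (isEnd) {
            playing = false;
            playNext();
        }
    }

    // Only called while holding the lock from say() or onPlayEnd().
    private void playNext() {
        Utterance next = pending.poll();
        if (next != null) {
            playing = true;
            speaker.speak(next.text, next.voice);
        }
    }
}
```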

Semantic understanding:

```java
mRobot.speech_understandText("Hello", IAlpha2RobotTextUnderstandListener);
```

IAlpha2RobotTextUnderstandListener is the semantic understanding callback interface; implement the following two methods:

```java
@Override
public void onAlpha2UnderStandTextResult(String arg0) {
    // Semantic understanding result: speak it out with TTS
    mRobot.speech_StartTTS(arg0);
}

@Override
public void onAlpha2UnderStandError(int arg0) throws RemoteException {
    // arg0: error code of semantic understanding
}
```
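The result callback above speaks the semantic answer directly. A minimal sketch of a slightly more defensive version, assuming that an empty result or an error should produce a spoken fallback (the source does not define the error codes, so they are only recorded here): a hypothetical UnderstandHandler that the two listener methods could delegate to. The handler, its fallback phrase and the Consumer standing in for mRobot.speech_StartTTS are illustrative, not part of the SDK.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical handler that the SDK listener methods could delegate to. */
class UnderstandHandler {
    private final Consumer<String> tts;  // stands in for mRobot.speech_StartTTS
    private final String fallback;
    private final List<Integer> errors = new ArrayList<>();

    UnderstandHandler(Consumer<String> tts, String fallback) {
        this.tts = tts;
        this.fallback = fallback;
    }

    /** Delegate of onAlpha2UnderStandTextResult(String arg0). */
    void onResult(String answer) {
        if (answer == null || answer.trim().isEmpty()) {
            tts.accept(fallback);  // nothing useful came back
        } else {
            tts.accept(answer);
        }
    }

    /** Delegate of onAlpha2UnderStandError(int arg0): records the code and speaks the fallback. */
    void onError(int errorCode) {
        errors.add(errorCode);
        tts.accept(fallback);
    }

    List<Integer> errorCodes() {
        return new ArrayList<>(errors);
    }
}
```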

Mic occupy and release

[2] After voice initialisation completes, the developer can request the microphone resource.

ISpeechInitInterface:

```java
public void initOver() {
    // Request the microphone after voice initialisation has completed
    mRobot.speech_SetMIC(true);
}
```

Release the microphone when the app exits:

```java
mRobot.speech_SetMIC(false);
```
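Because the microphone must be given back once the app no longer needs it, it helps to tie the release to a scope so it cannot be forgotten. A minimal sketch, assuming speech_SetMIC(true) requests the microphone and speech_SetMIC(false) releases it, as described in this section: a hypothetical MicLease implementing AutoCloseable, so try-with-resources releases the microphone exactly once, even if an exception is thrown. MicLease and MicSwitch are illustrative names; MicSwitch stands in for mRobot.speech_SetMIC.

```java
/** Hypothetical stand-in for mRobot.speech_SetMIC(boolean). */
interface MicSwitch {
    void setMic(boolean occupy);
}

/** Hypothetical scoped lease: occupies the mic on creation, releases it once on close(). */
class MicLease implements AutoCloseable {
    private final MicSwitch mic;
    private boolean released = false;

    MicLease(MicSwitch mic) {
        this.mic = mic;
        mic.setMic(true);  // request the microphone
    }

    @Override
    public void close() {
        if (!released) {
            released = true;
            mic.setMic(false);  // release the microphone exactly once
        }
    }
}
```

For an app that holds the microphone for its whole lifetime, the lease would be created in initOver() and closed when the app exits or receives the close-app broadcast.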

Execute motion in motion list

[1] Initialise the motion list object:

```java
mRobot.iniActionApi(AlphaActionClientListener);
```

[2] Execute a motion:

```java
mRobot.action_PlayActionName("ActionName");
```

[3] Stop the motion:

```java
mRobot.action_StopAction();
```
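The steps above start a motion by name and stop it with action_StopAction(). A minimal sketch, assuming you want at most one motion running at a time (the source does not state what happens if a second motion is started while one is playing): a hypothetical MotionController that stops the current motion before playing a new one. ActionPlayer is an illustrative stand-in for mRobot.action_PlayActionName and mRobot.action_StopAction. The controller does not know when a motion finishes on its own; a completion callback on AlphaActionClientListener, if one exists, would be the place to clear it.

```java
/** Hypothetical stand-in for the two motion calls on mRobot. */
interface ActionPlayer {
    void playActionName(String actionName);  // like mRobot.action_PlayActionName(...)
    void stopAction();                        // like mRobot.action_StopAction()
}

/** Hypothetical controller that keeps at most one motion running. */
class MotionController {
    private final ActionPlayer player;
    private String current = null;

    MotionController(ActionPlayer player) {
        this.player = player;
    }

    synchronized void play(String actionName) {
        if (current != null) {
            player.stopAction();  // stop the running motion first
        }
        current = actionName;
        player.playActionName(actionName);
    }

    synchronized void stop() {
        if (current != null) {
            player.stopAction();
            current = null;
        }
    }

    synchronized String current() {
        return current;
    }
}
```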

Syntax recognition

[1] Initialise the syntax recognition object:

```java
mRobot.speech_initGrammar(IAlpha2SpeechGrammarInitListener);
```

IAlpha2SpeechGrammarInitListener:

```java
public void speechGrammarInitCallback(String grammarID, int nErrorCode) {
    // Callback of syntax initialisation
    if (nErrorCode == 0) {
        // Initialised successfully
    }
}
```

[2] Execute syntax recognition:

```java
mRobot.speech_startGrammar(IAlpha2SpeechGrammarListener);
```

IAlpha2SpeechGrammarListener:

```java
public void onSpeechGrammarResult(String strResultType, String strResult) {
    // Callback of the syntax recognition result
}

public void onSpeechGrammarError(int nErrorCode) {
    // Callback of a syntax recognition error
}
```
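Grammar recognition is only started meaningfully after speechGrammarInitCallback reports nErrorCode == 0. A minimal sketch of that flow plus a simple dispatcher from recognised words to actions: GrammarFlow and its command map are hypothetical, and because the source does not describe the format of strResultType or strResult, the sketch assumes strResult is the recognised word itself. The Runnable passed to the constructor stands in for the SDK call that starts grammar recognition.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical flow: start grammar recognition only after a successful init, then dispatch results. */
class GrammarFlow {
    private final Runnable startGrammar;  // stands in for the SDK call that starts grammar recognition
    private final Map<String, Runnable> commands = new HashMap<>();
    private int lastError = 0;

    GrammarFlow(Runnable startGrammar) {
        this.startGrammar = startGrammar;
    }

    /** Registers the action to run when a given word is recognised. */
    void onCommand(String word, Runnable action) {
        commands.put(word, action);
    }

    /** Delegate of speechGrammarInitCallback; returns true if recognition was started. */
    boolean onInit(String grammarID, int nErrorCode) {
        lastError = nErrorCode;
        if (nErrorCode == 0) {
            startGrammar.run();  // initialised successfully
            return true;
        }
        return false;
    }

    /** Delegate of onSpeechGrammarResult; returns true if a registered action ran. */
    boolean onResult(String strResultType, String strResult) {
        Runnable action = commands.get(strResult == null ? null : strResult.trim());
        if (action == null) {
            return false;
        }
        action.run();
        return true;
    }

    /** Delegate of onSpeechGrammarError. */
    void onError(int nErrorCode) {
        lastError = nErrorCode;
    }

    int lastError() {
        return lastError;
    }
}
```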

Entrance of third party development

[1] Third-party applications must be started through the robot, so the start entrance is very important. Developers must comply with

[2] Third-party applications must register a broadcast receiver that listens for the action "com.ubtechinc.closeapp".

When this broadcast is received, the developer should release all resources. If your application occupies the microphone, release the microphone first:

```java
mRobot.speech_SetMIC(false);
mRobot.releaseApi();
```
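The close-app handling boils down to: check the broadcast action, release the microphone if you hold it, then release the API. A minimal plain-Java sketch of that order; the real code would live in an Android BroadcastReceiver.onReceive, which is omitted so the sketch runs outside Android. CloseAppHandler and RobotResources are hypothetical names, with RobotResources standing in for mRobot.speech_SetMIC(false) and mRobot.releaseApi(). The handler is idempotent, so a repeated broadcast does not release twice.

```java
/** Hypothetical stand-in for the two release calls on mRobot. */
interface RobotResources {
    void releaseMic();  // like mRobot.speech_SetMIC(false)
    void releaseApi();  // like mRobot.releaseApi()
}

/** Hypothetical handler for the close-app broadcast action. */
class CloseAppHandler {
    static final String CLOSE_ACTION = "com.ubtechinc.closeapp";

    private final RobotResources robot;
    private boolean holdsMic;
    private boolean released = false;

    CloseAppHandler(RobotResources robot, boolean holdsMic) {
        this.robot = robot;
        this.holdsMic = holdsMic;
    }

    /** Call with intent.getAction(); returns true if this call released the resources. */
    synchronized boolean onBroadcast(String action) {
        if (!CLOSE_ACTION.equals(action) || released) {
            return false;
        }
        if (holdsMic) {
            robot.releaseMic();  // release the microphone first
            holdsMic = false;
        }
        robot.releaseApi();      // then release the SDK connection
        released = true;
        return true;
    }
}
```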

```java
filter.addAction(this.getPackageName());
```

Receiving part:

```java
Bundle bundle = intent.getExtras();
TransferAppData data = (TransferAppData) bundle.getSerializable("appdata");
byte[] ipData = data.getDatas();
```